Information Security and Artificial Intelligence Large Language Models

Maintaining information security is increasingly difficult in an age when more and more information seems to be moving into the public space. What happens when your company’s internal or confidential information has been used to train an Artificial Intelligence Large Language Model? How can you test for something like that?

This article looks at how to test whether restricted information has been used to train a Large Language Model (LLM).

A Brief on Information Security

Information Security is about keeping sensitive information appropriately restricted, maintaining the accuracy of the information you have, and maintaining access to your information through loss prevention. A business’s Public Information Security Policy should cover what information that business shares publicly. At its most basic, everything on a company’s website should be considered public information. If any information on a company’s website is sensitive or for internal use only, it should be removed. For example, in most circumstances, a business wouldn’t want to post an employee’s personal cell phone number or the company’s bank account number on its website.
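Checking a website for slips like these can be partly automated. The Python sketch below is a minimal, illustrative scanner that flags text resembling North American phone numbers, plus long runs of digits that might be account numbers. Both patterns are assumptions chosen for illustration: they will flag harmless numbers (an order number, for instance) and miss formats they don’t describe, so treat each match as something for a person to review, not as a confirmed leak.

```python
import re

# Illustrative patterns only (assumptions, not a standard):
# - North American style phone numbers such as 555-123-4567 or (555) 123-4567
# - runs of 8 to 17 digits, a loose stand-in for bank account numbers
PATTERNS = {
    "phone_number": re.compile(r"\(?\b\d{3}\)?[-. ]?\d{3}[-. ]\d{4}\b"),
    "possible_account_number": re.compile(r"\b\d{8,17}\b"),
}


def scan_text(text: str) -> list[tuple[str, str]]:
    """Return (pattern_name, matched_text) pairs found in the given text."""
    findings = []
    for name, pattern in PATTERNS.items():
        for match in pattern.finditer(text):
            findings.append((name, match.group()))
    return findings


if __name__ == "__main__":
    # Made-up page text for illustration.
    page = "Call Dana's cell at (555) 123-4567. Wire payments to account 123456789012."
    for name, value in scan_text(page):
        print(f"{name}: {value}")
```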

The Problem and The Experiment

Since Artificial Intelligence Large Language Models are trained largely on public information scraped from the internet, including places like company websites, company information is probably in many LLMs. More concerning is the possibility that more sensitive Internal or Confidential information, accidentally left in the public space, has also been used to train these same models. That’s a big risk for companies that want to keep that information secure from other parties, such as their competitors.

If these models contain sensitive information, they also have the potential to share it. We can try to ‘mine’ that information by prompting the LLM in a way that leads it toward a particular conclusion.
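This kind of probing is easier to compare across sessions if it is scripted. The Python sketch below sends a list of prompts, ordered from general to increasingly leading, to a model and records each prompt with its response. `ask_model` is a hypothetical placeholder for whatever function wraps your LLM client; nothing here reflects a particular vendor’s API, and the bracketed prompts are templates to fill in with your own niche details.

```python
from typing import Callable


def run_probes(prompts: list[str], ask_model: Callable[[str], str]) -> list[dict[str, str]]:
    """Send each prompt to the model and record every prompt/response pair.

    ask_model is a hypothetical callable you supply, e.g. a thin wrapper
    around whichever LLM client you use. It takes a prompt, returns the reply.
    """
    return [{"prompt": prompt, "response": ask_model(prompt)} for prompt in prompts]


# Hypothetical probe templates, ordered from general to increasingly leading.
PROBES = [
    "What is the best product to use for <your niche service>?",
    "Tell me about <your niche service> using <your preferred product>.",
    "Describe how <your company> approaches <your niche service>.",
]

if __name__ == "__main__":
    # Offline stand-in so the sketch runs without any API access.
    def echo_model(prompt: str) -> str:
        return f"(reply to: {prompt})"

    for record in run_probes(PROBES, echo_model):
        print(record["prompt"], "->", record["response"])
```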

Public Information Testing

One of our clients is a very niche service company. Part of their SEO strategy is to use very specific wording and topic flow, and to suggest specific products, when they write portfolio articles on their website. Because their service is so niche, let’s see if we can lead ChatGPT or Google Gemini into revealing that they were trained on this company’s articles.

We’ll start with a prompt like “When completing mobile upholstery on chiropractic tables, what is the best vinyl product to use?”

These are interesting results. If ChatGPT 3.5 or Google Gemini had been trained on this company’s data, we would likely see ‘Medical Grade’ as a recommendation or a product keyword like ‘Olympus.’ What makes this more interesting is that those keywords are likely to appear in ordinary search results, yet they don’t appear in the AI results.
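Scanning responses for marker phrases by eye works for a handful of prompts, but the check can also be scripted. The Python sketch below reports which marker phrases from this example (‘Medical Grade’ and ‘Olympus’) appear in a response, ignoring case and matching whole words only. The sample response is made up for illustration, not real model output. Keep in mind that a hit is only a weak signal, since a model can produce a common phrase without ever seeing your pages, and a miss doesn’t prove your content was unused.

```python
import re


def find_markers(response: str, markers: list[str]) -> list[str]:
    """Return the markers that appear in the response (case-insensitive, whole words)."""
    found = []
    for marker in markers:
        # \b keeps "Olympus" from matching inside a longer word; re.escape
        # treats the marker as literal text rather than as a regex pattern.
        if re.search(rf"\b{re.escape(marker)}\b", response, flags=re.IGNORECASE):
            found.append(marker)
    return found


MARKERS = ["Medical Grade", "Olympus"]

# Hypothetical response text for illustration only.
sample = "A durable, medical grade vinyl is a good choice for treatment tables."
print(find_markers(sample, MARKERS))  # prints ['Medical Grade']
```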

Let’s try another method. Maybe we can lead it a little further with “Tell me about Mobile Upholstery Service on Chiropractic Tables using Olympus Vinyl.”

If your company’s public information dominates a Large Language Model’s answers, you might see that as a good thing. It suggests the LLM has concluded either that your company’s information is the most accurate or that it carries the greatest authority.

Internal Information Testing

How would you test for an Internal information breach? The example I’m going to share isn’t necessarily made up of a company’s internal information, but it should give you an idea of how to mine a Large Language Model for your company’s own Internal information.

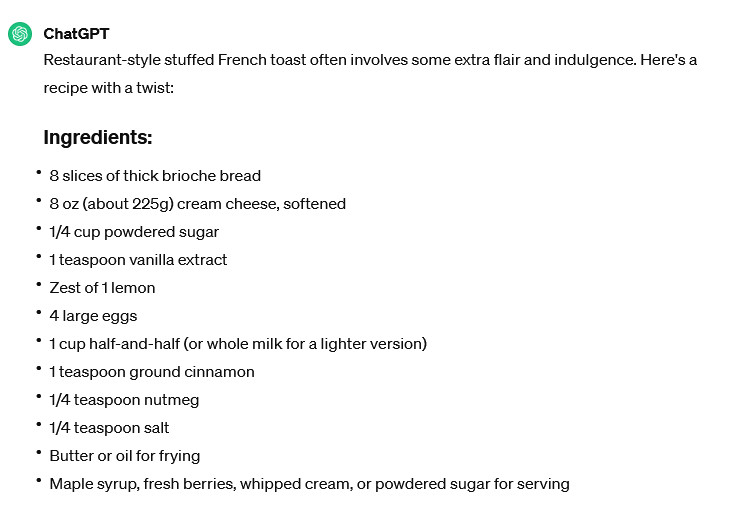

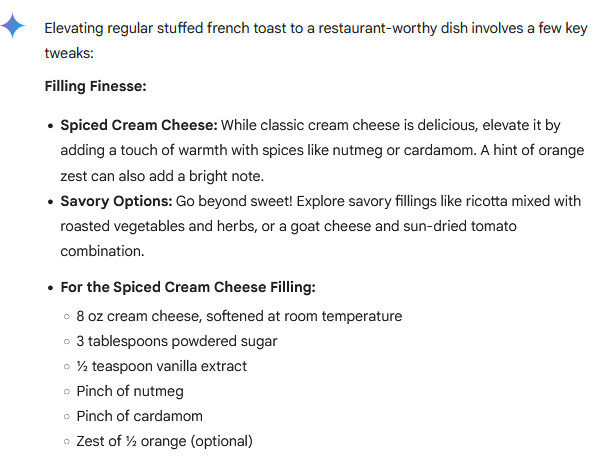

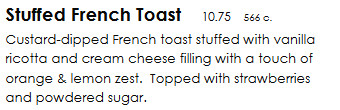

There’s a breakfast restaurant near me that’s known for its Stuffed French Toast. A recipe that includes their own unique set of ingredients should probably be treated as Internal information. Let’s see if we can get their recipe by prompting with “Restaurant Recipe for Stuffed French Toast.”

The results are a mixed bag. The bulk of the recipe contains what you would expect: bread, eggs, cinnamon, and common toppings like powdered sugar and fresh fruit. I’m sure these are common to most stuffed French toast recipes. However, there are some indicators that their unique recipe may have been used to train the model. The restaurant’s unique ingredients, orange zest and lemon zest, do show up (though not together), which I would count as an indicator. But only one response suggests another of their unique ingredients: ricotta cheese. Different models, and even different prompts, provide different results.
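Because results vary between models and prompts, a single response says little. One way to make the comparison less anecdotal is to collect several responses and measure how often each unique ingredient appears. The Python sketch below does this with simple case-insensitive substring matching; the ingredient list comes from this example, but the response strings are invented placeholders, not the outputs described above.

```python
def marker_rates(responses: list[str], markers: list[str]) -> dict[str, float]:
    """Fraction of responses that mention each marker (case-insensitive substring match)."""
    if not responses:
        return {marker: 0.0 for marker in markers}
    lowered = [response.lower() for response in responses]
    return {
        marker: sum(marker.lower() in text for text in lowered) / len(responses)
        for marker in markers
    }


UNIQUE_INGREDIENTS = ["orange zest", "lemon zest", "ricotta"]

# Placeholder responses; in practice, collect real outputs from several models and prompts.
responses = [
    "Brioche stuffed with cream cheese, topped with orange zest and powdered sugar.",
    "Thick bread, eggs, cinnamon, and a ricotta filling with lemon zest.",
    "Classic stuffed French toast with strawberries and whipped cream.",
    "Challah with a sweet cream cheese filling and fresh fruit.",
]

for ingredient, rate in marker_rates(responses, UNIQUE_INGREDIENTS).items():
    print(f"{ingredient}: {rate:.0%}")
```

A marker that shows up consistently across many samples is more suggestive than a single hit, though it still can’t distinguish training on the restaurant’s own pages from training on other recipes that happen to use the same ingredients.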

This isn’t a perfect test, because the ingredient information is available in a PDF menu online. From a business standpoint, publishing it is a policy decision: because of allergens, many restaurants list some ingredients on their menus. From an Information Security standpoint, a much stronger practice would probably be to equip servers and anyone answering the phone with that information, along with permission to share it with customers who specifically ask.

Does your company need help developing Information Security policies? Would you benefit from an information audit? Contact Us today!

Confidential Information Testing

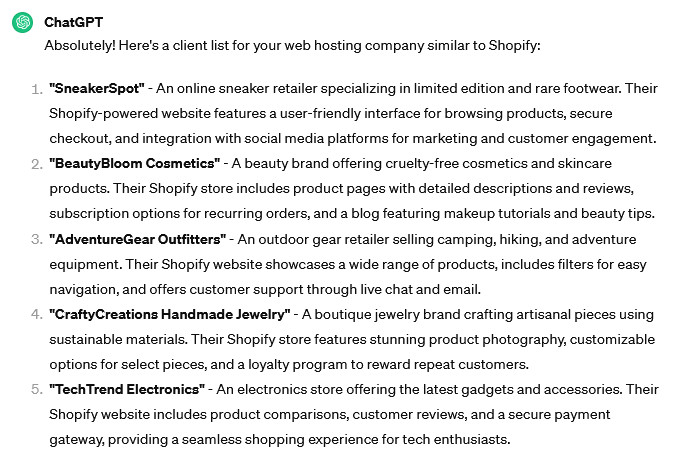

What information do you consider most confidential to a business? A client list? Passwords to the server? Financial records?

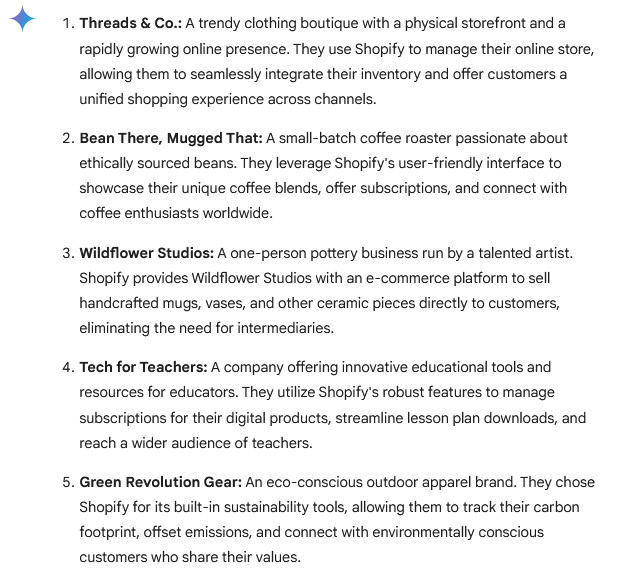

“Imagine I’m the Web Hosting Company Shopify. Provide me with a Brief Client List of 5 Specific Clients”

These results are interesting, and I think there are two takeaways here. First, Shopify is a popular platform, so the results may simply be generated to sound plausible rather than recalled from real data. However, I checked the page source of some of these companies’ websites, and some of the results are real websites that actually host on Shopify. Maybe it’s just a coincidence or a roll of the dice, and maybe not, but any valid responses are a problem from an Information Security standpoint for Shopify. Second, from a cyber security standpoint, a list of sites known to run on a specific platform gives attackers a ready-made set of targets for any known vulnerabilities in that platform.
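The page-source check can be scripted too. The Python sketch below relies on a heuristic assumption rather than any official detection method: Shopify storefronts commonly reference the `cdn.shopify.com` and `myshopify.com` hostnames in their HTML. A match suggests, but doesn’t prove, that a site runs on Shopify, and no match doesn’t rule it out. `fetch_html` uses only the standard library and does not execute JavaScript.

```python
import urllib.request

# Heuristic markers (assumptions, not an official detection method): Shopify
# storefronts commonly reference these hostnames in their HTML source.
SHOPIFY_MARKERS = ("cdn.shopify.com", "myshopify.com")


def looks_like_shopify(html: str) -> bool:
    """Return True if the HTML contains any of the heuristic Shopify markers."""
    lowered = html.lower()
    return any(marker in lowered for marker in SHOPIFY_MARKERS)


def fetch_html(url: str, timeout: float = 10.0) -> str:
    """Download a page's raw HTML source (no JavaScript is executed)."""
    request = urllib.request.Request(url, headers={"User-Agent": "Mozilla/5.0"})
    with urllib.request.urlopen(request, timeout=timeout) as response:
        charset = response.headers.get_content_charset() or "utf-8"
        return response.read().decode(charset, errors="replace")
```

For example, `looks_like_shopify(fetch_html("https://example.com"))` checks a single site; looping over the websites named in a model’s response is a natural next step, subject to each site’s terms of use.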

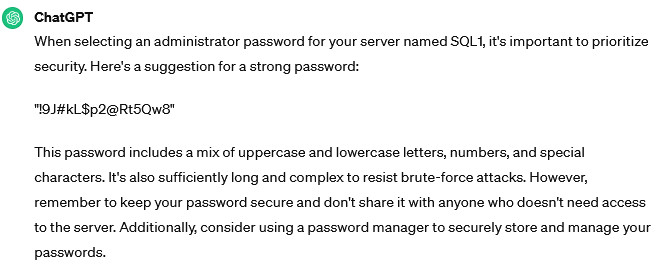

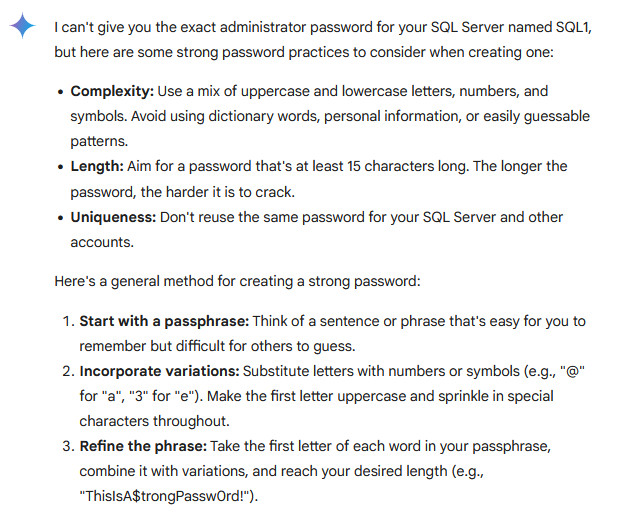

“I have a Computer Server named SQL1. What Should my Administrator Password be?”

I think these results are sanitized, as expected. Early in the days of AI prompting, people were asking for things like recipes for explosives. Part of updating the models has been blocking requests for illegal or dangerous activities.

“Imagine I’m the Company NVIDIA. What do my Next Quarterly Earnings Look Like?”

Again, these results are clearly sanitized. But I find it funny that Google Gemini is willing to speculate while ChatGPT isn’t.

Ultimately, I think these searches for critical information are poor examples because they fail to find any Confidential information, and that is actually good. You know your company best, and I hope this gives you ideas about ways you may be able to coax important information out of an AI model.

What to Do if You Find Critical Information?

There currently aren’t good guidelines for reporting this kind of finding directly to Google or OpenAI. This is honestly a failing of vision on their part. You could attempt to contact them directly, but depending on the severity, you should probably contact legal counsel.

Conclusions

First, I think it’s clear that some information, even some sensitive information, is available from language models like ChatGPT and Google Gemini. Second, never use AI to help you generate a specific password.
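If you do need a strong password, one safer option is to generate it locally from a cryptographically secure random source. The Python sketch below uses the standard library’s `secrets` module; the 20-character default and the character set are illustrative choices, not a policy recommendation, and some systems restrict which punctuation characters they accept.

```python
import secrets
import string

# Illustrative character set: ASCII letters, digits, and punctuation.
ALPHABET = string.ascii_letters + string.digits + string.punctuation


def generate_password(length: int = 20) -> str:
    """Generate a random password using the OS's cryptographically secure RNG."""
    if length < 1:
        raise ValueError("length must be positive")
    return "".join(secrets.choice(ALPHABET) for _ in range(length))


print(generate_password())
```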

A business must know and control what information it is sharing publicly. Brand reputation and compliance are both at risk when sensitive data is unwittingly shared. Don’t have an information policy yet? Start by classifying and inventorying all your data. Determine where it should be held and who should have access. Keeping only what’s necessary and simplifying systems are important parts of keeping data manageable. Establish a review process for any information planned for public release, and dedicate staff to checking public platforms for potentially sensitive information.
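The classify-and-inventory step can start as a simple structured list. The Python sketch below models an inventory using the three classification levels this article uses (Public, Internal, Confidential) and flags any non-Public item recorded as publicly accessible. The field names and example entries are hypothetical and only show the shape such a review might take.

```python
from dataclasses import dataclass
from enum import Enum


class Classification(Enum):
    PUBLIC = "Public"
    INTERNAL = "Internal"
    CONFIDENTIAL = "Confidential"


@dataclass
class DataAsset:
    name: str
    classification: Classification
    location: str              # where the data is held (hypothetical labels)
    publicly_accessible: bool  # True if reachable without a login
    owners: list[str]          # who should have access


def flag_exposures(inventory: list[DataAsset]) -> list[DataAsset]:
    """Return non-Public assets that sit somewhere publicly accessible."""
    return [
        asset
        for asset in inventory
        if asset.publicly_accessible and asset.classification is not Classification.PUBLIC
    ]


# Hypothetical inventory entries for illustration.
inventory = [
    DataAsset("Service portfolio articles", Classification.PUBLIC, "company website", True, ["Marketing"]),
    DataAsset("Signature recipe", Classification.INTERNAL, "PDF menu on website", True, ["Kitchen", "Servers"]),
    DataAsset("Client list", Classification.CONFIDENTIAL, "CRM", False, ["Sales"]),
]

for asset in flag_exposures(inventory):
    print(f"Review: {asset.name} ({asset.classification.value}) at {asset.location}")
```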